Research Projects

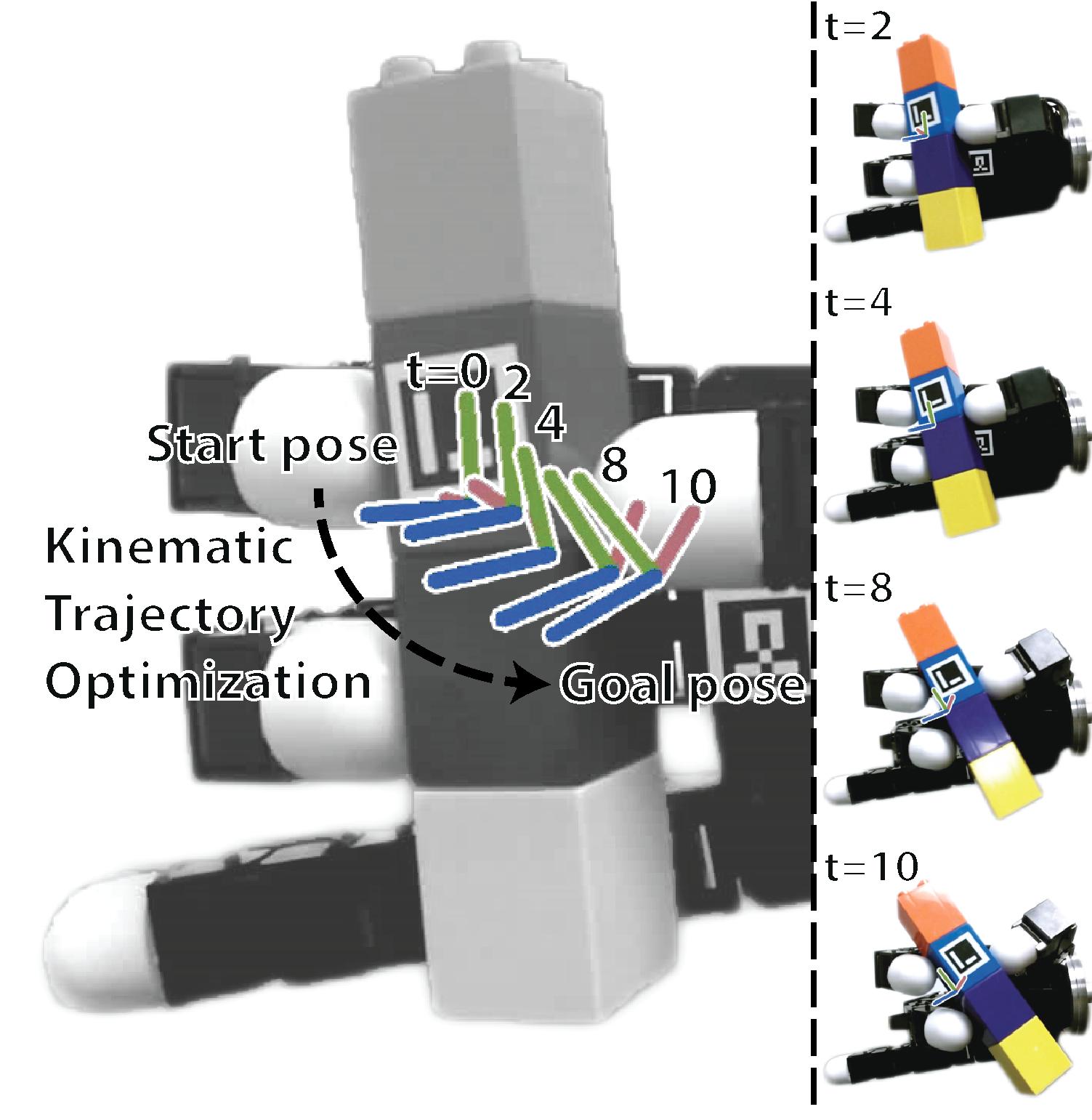

In-Hand Manipulation

We investigate the problem of a robot autonomously moving an object relative to its hand. This project focuses on multi-fingered, in-hand manipulation of novel objects. Objects from the YCB dataset are used with the Allegro robotic hand to verify approaches. Benchmarking schemes are also introduced to compare with other methods.

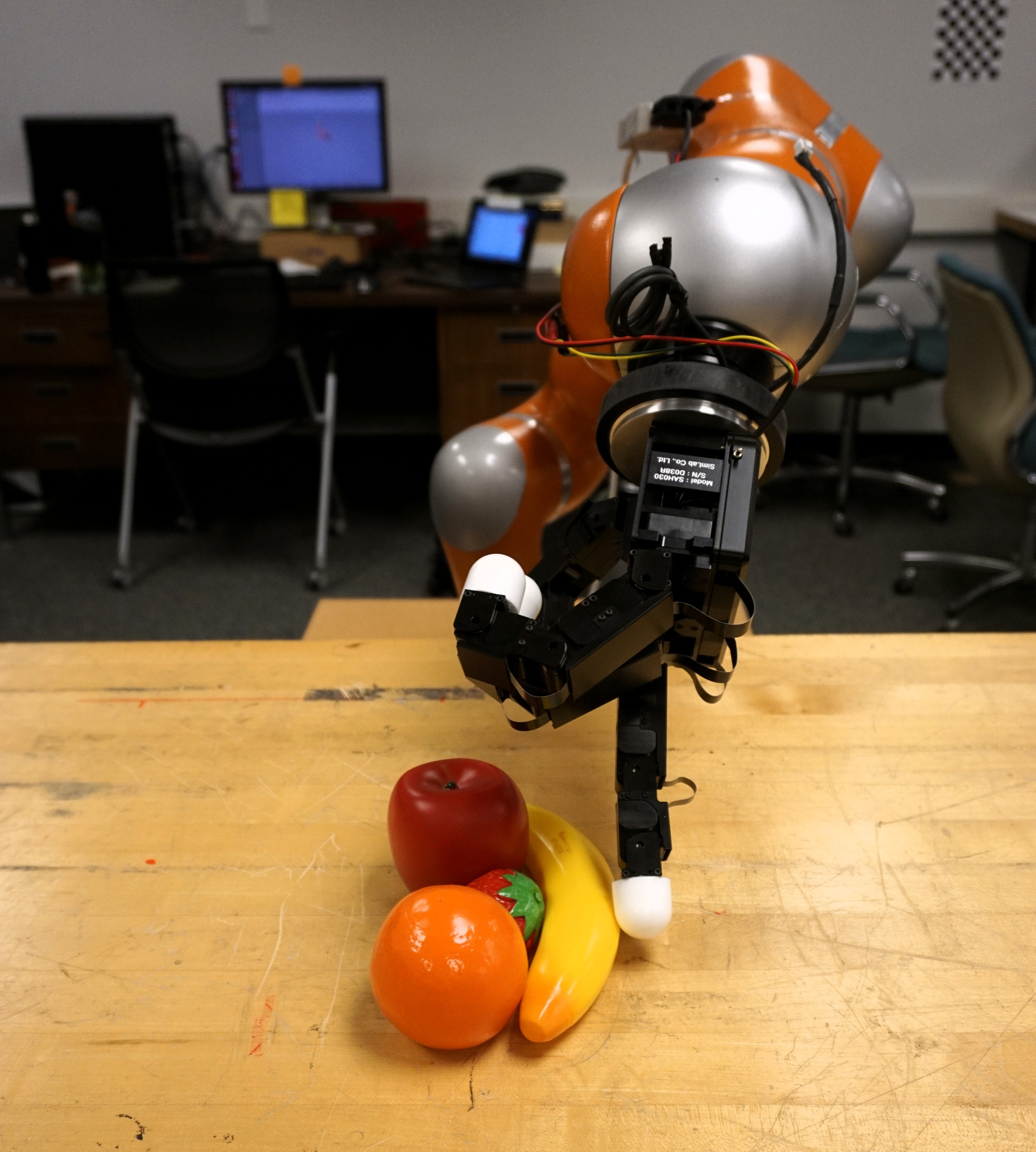

Multi-fingered Grasp Planning

We propose a novel approach to multi-fingered grasp planning that leverages learned deep neural network models. We train a convolutional neural network to predict grasp success as a function of both visual information about an object and the grasp configuration. We can then formulate grasp planning as inferring the grasp configuration that maximizes the probability of grasp success. Our work is the first to directly plan high-quality multi-fingered grasps in configuration space using a deep neural network without the need for an external planner. We validate our inference method by performing both multi-finger and two-finger grasps on real robots.
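The idea of planning as inference can be illustrated with a minimal sketch. It assumes projected gradient ascent on the log-probability of success, with the grasp configuration clamped to joint limits after every step. The function `success_probability` is a hypothetical toy stand-in for the trained network, and the optimizer, step size, and limits are illustrative assumptions rather than the method used in this project.

```python
import math

def success_probability(visual_features, grasp_config):
    """Hypothetical stand-in for a trained network: returns P(success) in (0, 1).

    Toy assumption: success is most likely when the configuration
    matches the visual feature vector element by element.
    """
    sq_dist = sum((g - v) ** 2 for g, v in zip(grasp_config, visual_features))
    return 1.0 / (1.0 + math.exp(sq_dist - 2.0))

def numerical_gradient(f, x, eps=1e-5):
    """Central-difference gradient of a scalar function f at point x."""
    grad = []
    for i in range(len(x)):
        hi, lo = list(x), list(x)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2.0 * eps))
    return grad

def plan_grasp(visual_features, initial_config, lower, upper, step=0.5, iters=200):
    """Projected gradient ascent on log P(success | visual_features, config)."""
    config = list(initial_config)

    def objective(c):
        return math.log(success_probability(visual_features, c))

    for _ in range(iters):
        grad = numerical_gradient(objective, config)
        # Take an ascent step, then clamp each joint to its (assumed) limits.
        config = [min(max(c + step * g, lo), hi)
                  for c, g, lo, hi in zip(config, grad, lower, upper)]
    return config

if __name__ == "__main__":
    features = [0.3, -0.2, 0.5]  # made-up visual features
    start = [0.0, 0.0, 0.0]      # made-up initial grasp configuration
    best = plan_grasp(features, start, [-1.0] * 3, [1.0] * 3)
    print(success_probability(features, start), success_probability(features, best))
```

With a real network, the numerical gradient would normally be replaced by automatic differentiation with respect to the configuration input, while the visual input is held fixed.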

Tactile Perception and Manipulation

We are interested in enabling robots to use the sense of touch (tactile sensing) to improve both their perception and manipulation of the world.

Magnetic Manipulation

In cooperation with Dr. Jake Abbott and the Utah TeleRobotics Lab, we investigate how advances in autonomous robot manipulation can be applied to magnetic manipulation for medical devices, micro-robots, and other applications.

Manipulation of Object Collections

The research goal of this project is to enable robots to manipulate and reason about groups of objects en masse. The hypothesis of this project is that treating object collections as single entities enables data-efficient, self-supervised learning of contact locations for pushing and grasping grouped objects. This project investigates a novel neural network architecture for self-supervised manipulation learning. The convolutional neural network model takes as input sensory data and a robot hand configuration. The network learns to predict as output a manipulation quality score for the given inputs. When presented with a novel scene, the robot can perform manipulation inference by evaluating the current sensory data, while directly optimizing the manipulation score predicted by the network over different hand configurations. The project supports the development of experimental protocols and the collection of associated data for dissemination to stimulate research activity in manipulation of object collections.
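Manipulation inference over hand configurations can be sketched as a search for the configuration with the highest predicted score. The example below makes several assumptions: the scene is a flat list of 2D points covering the whole collection, the hand configuration is a planar pose `(x, y, theta)`, and `manipulation_score` is a hypothetical hand-written stand-in for the learned network. It uses an exhaustive search over candidate poses instead of the direct optimization described above, because the toy score is piecewise constant.

```python
import math
from itertools import product

def manipulation_score(points, hand_config, half_length=0.10, half_width=0.03):
    """Hypothetical stand-in for the learned score network.

    Returns the fraction of scene points inside the hand's closing region,
    modeled as a rectangle centered at (x, y) and rotated by theta.
    """
    x, y, theta = hand_config
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    inside = 0
    for px, py in points:
        dx, dy = px - x, py - y
        along = dx * cos_t + dy * sin_t     # coordinate along the hand axis
        across = -dx * sin_t + dy * cos_t   # coordinate across the hand axis
        if abs(along) <= half_length and abs(across) <= half_width:
            inside += 1
    return inside / len(points)

def infer_hand_config(points, xs, ys, thetas):
    """Score every candidate pose and return the best one."""
    candidates = product(xs, ys, thetas)
    return max(candidates, key=lambda c: manipulation_score(points, c))

if __name__ == "__main__":
    # Made-up scene: seven objects lying along a diagonal line.
    scene = [(0.5 + 0.02 * i, 0.5 + 0.02 * i) for i in range(-3, 4)]
    xs = ys = [0.3, 0.4, 0.5, 0.6, 0.7]
    thetas = [0.0, math.pi / 4, math.pi / 2, 3 * math.pi / 4]
    best = infer_hand_config(scene, xs, ys, thetas)
    print(best, manipulation_score(scene, best))
```

Treating the collection as one point set means the score never needs per-object segmentation, which mirrors the single-entity hypothesis stated above.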

Non-prehensile Manipulation

While grasping is the dominant form of manipulation for robots, pushing provides an interesting alternative in many contexts. In particular, when a robot interacts with previously unseen objects, pushing is less likely than grasping to cause dramatic failures such as dropping or breaking an object.